Django REST Framework, Django, AWS, S3

In this third and final part of our three-part series, we will configure our Django application to store and serve static and media files from Amazon S3.

Table of contents

- What is Amazon S3? (Part 1)

- Building a small Django application for uploading images (Part 1)

- Create an S3 Bucket (Part 2)

- AWS IAM (Identity and Access Management) (Part 2)

- Configure our Django app with AWS settings (Part 3)

- Summary

Configure our Django app with AWS settings

1. Install the following packages:

pip install boto3
pip install django-storages
pip install python-decouple

2. Boto3 is the AWS SDK for Python; it lets you create, configure, and manage AWS services from Python code. django-storages is a collection of custom storage backends for Django, including an Amazon S3 backend built on Boto3.
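The names you pip install differ from the names you import: django-storages is imported as storages (which is why "storages" appears in INSTALLED_APPS) and python-decouple as decouple. A minimal stdlib check, using a hypothetical helper named check_packages, can confirm that all three are importable in the active environment:

```python
from importlib.util import find_spec

# pip distribution name -> module name used in imports and INSTALLED_APPS
PACKAGES = {
    "boto3": "boto3",
    "django-storages": "storages",
    "python-decouple": "decouple",
}


def check_packages(packages=PACKAGES):
    """Return {distribution name: True if its module can be imported}."""
    # find_spec returns None for a missing top-level module instead of raising.
    return {dist: find_spec(module) is not None for dist, module in packages.items()}


if __name__ == "__main__":
    for dist, ok in check_packages().items():
        print(f"{dist}: {'installed' if ok else 'missing'}")
```

Run it inside the same virtual environment that runs manage.py; a "missing" line means the corresponding pip install did not reach that environment.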

3. For security reasons, never put your secret keys in settings.py. Instead, store all secret configuration in a .env or .ini file and read the values from that file. When it's time for production, nothing in your code changes; you just define the sensitive values in the production environment. python-decouple handles this: it reads settings from environment variables or from a .env or .ini file.
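python-decouple's actual interface is config("KEY"), with optional default= and cast= arguments, and it checks environment variables before the .env or settings.ini file. To illustrate that lookup order without a third-party dependency, here is a minimal stdlib sketch. read_env_file and get_setting are hypothetical helpers written for this illustration, not part of python-decouple, and the parser only handles simple KEY=VALUE lines.

```python
import os
import tempfile
from pathlib import Path


def read_env_file(path):
    """Parse KEY=VALUE lines from a .env file, skipping blank lines and # comments."""
    values = {}
    for raw_line in Path(path).read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip().strip("'\"")
    return values


def get_setting(key, env_path=".env", default=None):
    """Return a setting: environment variable first, then the .env file, then the default."""
    if key in os.environ:
        return os.environ[key]  # the production environment wins
    if Path(env_path).exists():
        file_values = read_env_file(env_path)
        if key in file_values:
            return file_values[key]  # local development value
    if default is None:
        raise KeyError(f"{key} is not set in the environment or in {env_path}")
    return default


# Demo with a throwaway .env file (placeholder value, not a real bucket).
with tempfile.TemporaryDirectory() as tmp:
    env_path = Path(tmp) / ".env"
    env_path.write_text(
        "# local secrets, keep out of version control\n"
        "AWS_STORAGE_BUCKET_NAME=my-example-bucket\n"
    )
    bucket_name = get_setting("AWS_STORAGE_BUCKET_NAME", env_path)

print(bucket_name)  # my-example-bucket, unless the variable is also set in the environment
```

Because the environment is consulted first, the same settings.py works locally (values from .env) and in production (values from real environment variables). Whichever tool you use, add .env to .gitignore so the file never reaches your repository.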

4. Add the following configuration in settings.py. The four account-specific values are read with python-decouple, so they stay out of the code:

from decouple import config

INSTALLED_APPS = [
    # ... your existing apps ...
    "storages",
]

AWS_ACCESS_KEY_ID = config("AWS_ACCESS_KEY_ID")
AWS_SECRET_ACCESS_KEY = config("AWS_SECRET_ACCESS_KEY")
AWS_STORAGE_BUCKET_NAME = config("AWS_STORAGE_BUCKET_NAME")
AWS_S3_REGION_NAME = config("AWS_S3_REGION_NAME")
AWS_S3_SIGNATURE_VERSION = "s3v4"
AWS_LOCATION = "static"
AWS_S3_ADDRESSING_STYLE = "virtual"
AWS_S3_FILE_OVERWRITE = False
AWS_DEFAULT_ACL = "private"
AWS_S3_VERIFY = True

STATICFILES_STORAGE = "storages.backends.s3boto3.S3Boto3Storage"
DEFAULT_FILE_STORAGE = "app.storages.MediaStorage"
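STATICFILES_STORAGE and DEFAULT_FILE_STORAGE are the classic setting names. Django 4.2 introduced a single STORAGES setting, deprecated those two, and Django 5.1 removed them. On Django 4.2 or later, a sketch of the equivalent configuration, assuming the same app.storages.MediaStorage path, looks like this; use it instead of, not alongside, the two older settings.

```python
# settings.py (Django 4.2+): replaces STATICFILES_STORAGE and DEFAULT_FILE_STORAGE
STORAGES = {
    # Backend for uploaded files (FileField / ImageField), i.e. media files.
    "default": {
        "BACKEND": "app.storages.MediaStorage",
    },
    # Backend used by collectstatic and the {% static %} template tag.
    "staticfiles": {
        "BACKEND": "storages.backends.s3boto3.S3Boto3Storage",
    },
}
```

The AWS_* settings above still apply, because the S3 backends read them as their defaults.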

5. Here is a brief explanation of the AWS settings used in our Django application. To learn more about them, see the django-storages documentation.

AWS_ACCESS_KEY_ID => Your Amazon Web Services access key ID, as a string.

AWS_SECRET_ACCESS_KEY => Your Amazon Web Services secret access key, as a string.

AWS_STORAGE_BUCKET_NAME => Your Amazon Web Services storage bucket name, as a string.

AWS_S3_REGION_NAME => Name of the AWS S3 region to use (e.g. ap-south-1).

AWS_S3_SIGNATURE_VERSION => As of boto3 version 1.13.21, the default signature version used for generating presigned URLs is still v2. To be able to access your S3 objects in all regions through presigned URLs, explicitly set this to s3v4.

AWS_LOCATION => A path prefix that will be prepended to all uploads.

AWS_S3_ADDRESSING_STYLE => Possible values are virtual and path. Default is None.

AWS_S3_FILE_OVERWRITE => By default, files with the same name overwrite each other. Set this to False to have extra characters appended to the new file's name instead.

AWS_DEFAULT_ACL => Default is None, which means files will be private per Amazon's default. Use this to set an ACL on your files, such as public-read.

AWS_S3_VERIFY => Whether to verify the SSL certificate of the connection to S3. Set it to False to skip certificate verification, or to a path to a CA certificate bundle.
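To make AWS_LOCATION and AWS_S3_ADDRESSING_STYLE concrete, here is a minimal stdlib sketch of how an object key and its unsigned URL are composed. The helper s3_object_url, the bucket name my-example-bucket, and the file names are hypothetical. django-storages builds the real URLs through boto3, and because AWS_DEFAULT_ACL is private it also appends presigned query-string parameters by default (AWS_QUERYSTRING_AUTH = True), so real URLs will be longer than these.

```python
from urllib.parse import quote


def s3_object_url(bucket, region, key, location="", addressing_style="virtual"):
    """Build the unsigned HTTPS URL of an S3 object (hypothetical helper)."""
    # The storage's location (AWS_LOCATION or a subclass's `location`) acts as a key prefix.
    prefix = location.strip("/")
    full_key = f"{prefix}/{key.lstrip('/')}" if prefix else key.lstrip("/")
    encoded_key = quote(full_key)  # percent-encodes unsafe characters, keeps "/"
    if addressing_style == "virtual":
        # Virtual-hosted style: the bucket name is part of the host name.
        return f"https://{bucket}.s3.{region}.amazonaws.com/{encoded_key}"
    if addressing_style == "path":
        # Path style: the bucket name is the first path segment.
        return f"https://s3.{region}.amazonaws.com/{bucket}/{encoded_key}"
    raise ValueError("addressing_style must be 'virtual' or 'path'")


# A static file under AWS_LOCATION = "static", virtual-hosted style:
print(s3_object_url("my-example-bucket", "ap-south-1", "css/site.css", location="static"))
# https://my-example-bucket.s3.ap-south-1.amazonaws.com/static/css/site.css

# An uploaded image under MediaStorage's "media" location, path style:
print(s3_object_url("my-example-bucket", "ap-south-1", "banner.png",
                    location="media", addressing_style="path"))
# https://s3.ap-south-1.amazonaws.com/my-example-bucket/media/banner.png
```

In the S3 console these prefixes show up as static/ and media/ "folders", even though S3 itself only stores flat keys.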

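6. Create a storages.py file and add the following code. Because DEFAULT_FILE_STORAGE points to app.storages.MediaStorage, the file belongs in the app package named app (app/storages.py); adjust the dotted path if your app has a different name. This class stores media files under a media/ prefix in the S3 bucket, separate from the static/ prefix used for static files.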

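from storages.backends.s3boto3 import S3Boto3Storage


class MediaStorage(S3Boto3Storage):
    # Store uploaded media under the "media/" prefix instead of AWS_LOCATION ("static").
    location = "media"
    # Never overwrite an existing object; append random characters to the new name instead.
    file_overwrite = False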
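With file_overwrite = False, django-storages falls back to Django's standard Storage.get_available_name(), which keeps the original name if it is free and otherwise appends an underscore plus seven random alphanumeric characters before the extension. The sketch below reproduces that naming rule with the standard library so you can see what keys to expect in the bucket. available_name, alternative_name, and the existing-key set are hypothetical stand-ins (the real check asks S3 whether the key exists), and Django's max_length truncation is left out.

```python
import posixpath
import secrets
import string

# Django's get_random_string draws from ASCII letters and digits by default.
ALPHABET = string.ascii_letters + string.digits


def alternative_name(file_root, file_ext, length=7):
    """Append '_' plus random characters before the extension."""
    suffix = "".join(secrets.choice(ALPHABET) for _ in range(length))
    return f"{file_root}_{suffix}{file_ext}"


def available_name(name, existing_keys, overwrite=False):
    """Return a key that will not clobber an existing object (hypothetical helper)."""
    if overwrite:
        return name  # file_overwrite=True: reuse the key and replace the object
    dir_name, file_name = posixpath.split(name)
    file_root, file_ext = posixpath.splitext(file_name)
    while name in existing_keys:  # retry until the generated key is free
        name = posixpath.join(dir_name, alternative_name(file_root, file_ext))
    return name


existing = {"blog/banner.png"}
print(available_name("blog/banner.png", existing))
# prints blog/banner_ followed by 7 random letters/digits and .png
print(available_name("blog/banner.png", existing, overwrite=True))
# blog/banner.png
```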

7. Start the development server by executing the command below:

python manage.py runserver

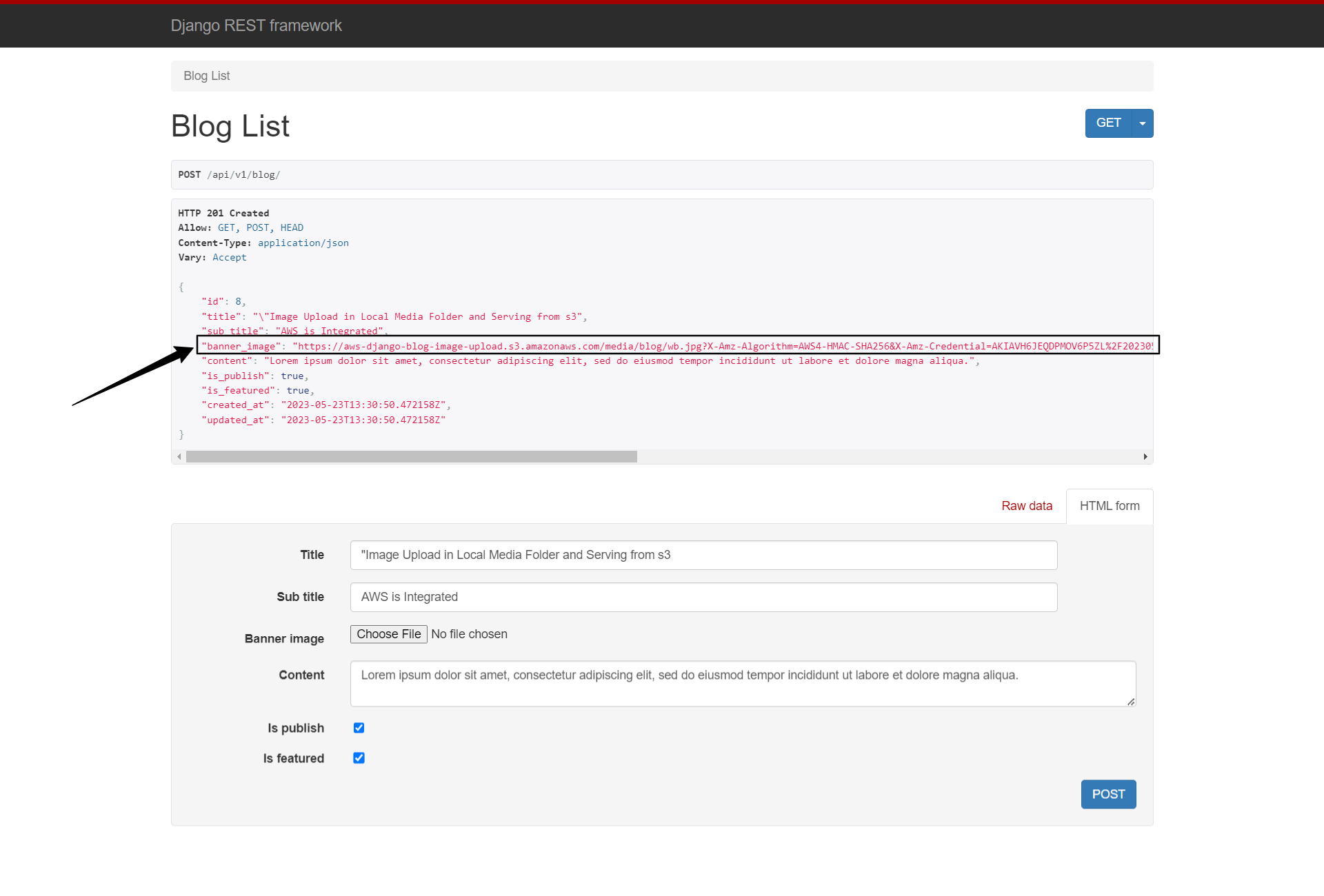

8. When we send a POST request with appropriate data to the API endpoint http://localhost:8000/api/v1/blog/, using the Django REST Framework browsable API in any browser, we get the following output:

Look at the area marked with a black rectangle: the banner_image URL points to our S3 bucket, which shows that the image is stored in and served from S3.

Summary

A full working project can be found on GitHub in the Django and AWS S3 repository.

Oxvsys Automation has expertise in AWS S3 and a deep understanding of various cloud technologies. We have extensive hands-on experience working with AWS S3, the cloud storage service offered by Amazon Web Services.

Oxvsys has not only utilized AWS S3 for storing and managing assets but has also written a series of insightful blog posts on various cloud technologies. Our blogs cover a wide range of topics related to cloud computing, including AWS S3 best practices, data management strategies, cost optimization techniques, and integration with other AWS services.


Stay tuned to get the latest innovation from Oxvsys and happy automation.